In our final activity, I took a video of a moving object and extracted its position frame by frame to derive a physical variable. The object I used with my group mate Ryan was a black basketball dropped from a certain height:

The above animation is slower than the real kinematic event. First, I extracted each frame from the video using the application Free Video to JPG Converter. Then, I tried to extract the ball from each image using non-parametric estimation, also known as histogram backprojection (Image Segmentation). This is done by selecting a region of interest, taking its histogram in normalized chromaticity coordinates, and then backprojecting the histogram onto the image, so that each pixel is assigned the histogram value of its own chromaticity. I cropped a part of the ball in the image to use as the region of interest:
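The backprojection step described above can be sketched in Python as follows. This is only an illustration, not the code I used for the activity: it assumes NumPy is available, and the function names `chromaticity` and `backproject` and the choice of 32 bins are hypothetical.

```python
import numpy as np

def chromaticity(rgb):
    """Convert an (H, W, 3) RGB image to normalized chromaticity (r, g)."""
    rgb = rgb.astype(float)
    total = rgb.sum(axis=-1)
    total[total == 0] = 1.0  # avoid division by zero for pure black pixels
    return rgb[..., 0] / total, rgb[..., 1] / total

def backproject(image, roi, bins=32):
    """Assign each pixel the normalized ROI histogram value of its (r, g) bin."""
    # 2D histogram of the region of interest in r-g space.
    r_roi, g_roi = chromaticity(roi)
    hist, _, _ = np.histogram2d(
        r_roi.ravel(), g_roi.ravel(), bins=bins, range=[[0, 1], [0, 1]]
    )
    hist /= hist.sum()
    # Look up every image pixel in that histogram.
    r, g = chromaticity(image)
    ri = np.minimum((r * bins).astype(int), bins - 1)
    gi = np.minimum((g * bins).astype(int), bins - 1)
    return hist[ri, gi]
```

Pixels whose chromaticity is common in the region of interest come out bright, and all others come out dark. The number of bins (an assumption here) controls how strictly a pixel has to match the patch.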

After cropping each frame to get a part where the ball appears, I segmented each frame individually, and got:

![]()

We can see that the ball was not successfully extracted. The white lines on the ball have a different color from the region of interest, so they are left out, while some dark parts of the background are similar enough to the region of interest that the segmentation picks them up as well. I tried to get a cleaner image of the ball using a morphological closing operator. Using a structuring element with a radius of 20 pixels, I got:
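For reference, closing is a dilation followed by an erosion with the same structuring element. Below is a minimal NumPy-only sketch, assuming a boolean mask and a disk-shaped structuring element; the helper names `disk`, `_morph`, and `closing` are hypothetical and this is not the implementation I used.

```python
import numpy as np

def disk(radius):
    """Boolean disk-shaped structuring element of the given pixel radius."""
    y, x = np.ogrid[-radius:radius + 1, -radius:radius + 1]
    return x * x + y * y <= radius * radius

def _morph(mask, selem, dilate):
    """Dilate (OR over shifts) or erode (AND over shifts) a boolean mask."""
    r = selem.shape[0] // 2
    # Pad with background for dilation and foreground for erosion,
    # so the image border itself does not add or remove pixels.
    padded = np.pad(mask, r, constant_values=not dilate)
    h, w = mask.shape
    out = np.zeros_like(mask) if dilate else np.ones_like(mask)
    for dy, dx in zip(*np.nonzero(selem)):
        window = padded[dy:dy + h, dx:dx + w]
        out = out | window if dilate else out & window
    return out

def closing(mask, radius):
    """Morphological closing: dilation followed by erosion."""
    selem = disk(radius)
    dilated = _morph(mask, selem, dilate=True)
    return _morph(dilated, selem, dilate=False)
```

With this sketch, `closing(mask, 20)` would correspond to the 20-pixel radius mentioned above. Closing fills holes and gaps smaller than the structuring element, but it also merges the ball with nearby background blobs.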

![]()

We can see that the ball looks solid and filled in most frames, but it no longer looks like a circle. It is hard to extract the ball from our video with color segmentation and morphological operations because the ball is not uniformly colored and because some objects in the background do not have high contrast with the ball.

Since these methods did not work well, I tried to extract the ball by converting each image to grayscale and doing a simple segmentation by threshold (Image Segmentation). This was done by setting pixel values greater than a threshold value of 50 to 1 and setting all other values to 0. I got the result:
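The thresholding rule above is short enough to write out. In this sketch the grayscale weights (the common 0.299, 0.587, 0.114 luminance weights) are an assumption, since I did not record which conversion was applied, and the function names are hypothetical.

```python
import numpy as np

def to_grayscale(rgb):
    """Weighted sum of the R, G, B channels (assumed luminance weights)."""
    weights = np.array([0.299, 0.587, 0.114])
    return rgb.astype(float) @ weights

def threshold(gray, level=50):
    """Set pixels greater than `level` to 1 and all other pixels to 0."""
    return (gray > level).astype(np.uint8)
```

For a dark object on a brighter background, the complementary mask `1 - mask` would select the object instead of the background.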

![]()

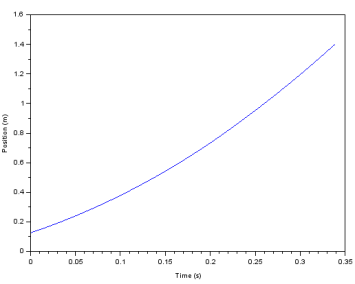

This looks better than the previous segmented images. From these processed frames, I tried to calculate the acceleration due to gravity. For each frame, I calculated the y-coordinate of the centroid of the ball by taking the mean of the vertical pixel coordinates of all pixels in the extracted ball. Before taking the video, I measured the length of the gray rectangle on the right side of the ball so that I could convert pixels to physical units. I got a measurement of 66 cm for 314 pixels, which gives a conversion factor of about 0.21 cm/pixel. Using this, I calculated the physical position of the ball in meters from the centroid y-coordinates:
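A minimal sketch of the centroid and unit conversion, assuming NumPy and a binary mask in which the ball pixels are nonzero. The names `centroid_y` and `pixels_to_meters` are hypothetical; the 66 cm and 314 pixel values are the measurements quoted above.

```python
import numpy as np

CM_PER_PIXEL = 66.0 / 314.0  # measured: 66 cm spans 314 pixels (about 0.21)

def centroid_y(mask):
    """Mean row index of all nonzero pixels in a binary mask."""
    rows, _ = np.nonzero(mask)
    if rows.size == 0:
        raise ValueError("mask has no foreground pixels")
    return rows.mean()

def pixels_to_meters(y_pixels):
    """Convert a vertical pixel coordinate to meters."""
    return y_pixels * CM_PER_PIXEL / 100.0
```

Row indices increase downward in an image array, so with this convention the position of a falling ball increases with time.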

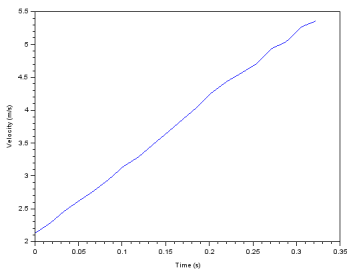

I calculated the time step between frames from the video frame rate of 59 frames per second, so the time between consecutive frames is just 1/59 seconds. I took the numerical derivative of the positions with respect to time to get the velocity of the ball, and got:
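One way to take that derivative is a forward difference between consecutive frames. The sketch below assumes this scheme, which may differ from the one I actually applied; the name `velocities` is hypothetical.

```python
FPS = 59.0      # frame rate of the video
DT = 1.0 / FPS  # time between consecutive frames, in seconds

def velocities(positions, dt=DT):
    """Forward-difference velocity between consecutive positions (m/s)."""
    return [(b - a) / dt for a, b in zip(positions, positions[1:])]
```

A list of N positions yields N - 1 velocities, each best associated with the midpoint in time between its two frames.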

We can see that the plot looks almost linear, which is expected since the ball should have a constant acceleration, namely the acceleration due to gravity. I calculated the slope of the velocity versus time plot and got a value of 10.32 m/s^2, which is a 5.20% error relative to the known value of the acceleration due to gravity, 9.81 m/s^2. This error could be due to the white lines around the ball, which can shift the centroid upwards.
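The slope and the percent error can be computed as sketched below. An ordinary least-squares line fit is assumed here for the slope, and the function names are hypothetical.

```python
def fit_slope(t, v):
    """Least-squares slope of v versus t."""
    n = len(t)
    t_mean = sum(t) / n
    v_mean = sum(v) / n
    num = sum((ti - t_mean) * (vi - v_mean) for ti, vi in zip(t, v))
    den = sum((ti - t_mean) ** 2 for ti in t)
    return num / den

def percent_error(measured, accepted=9.81):
    """Absolute percent deviation of a measurement from the accepted value."""
    return abs(measured - accepted) / accepted * 100.0
```

As a check on the arithmetic, `percent_error(10.32)` is |10.32 - 9.81| / 9.81 × 100, which rounds to the 5.20% quoted above.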

Since I needed to resort to segmentation by threshold instead of color segmentation, I’ll give myself a 9/10. The results could have been better, and color segmentation would have been applicable, if we had a video of a uniformly colored object against a background with high contrast.

I’d like to thank Dr. Soriano for all the fun activities in our class this semester. I enjoyed all activities and I’m sure my classmates did as well. I feel relieved and at the same time sad, but at last, we’re finished with all activities:

Reference:

M. Soriano, “A9 – Basic Video Processing,” Applied Physics 186, National Institute of Physics, 2015.